With the ever-increasing number of digital methods for capturing traffic and driving customer orders, the question often arises whether catalog or postal marketing is effective with savvy digital customers. The rationale is that because these customers are digital, they are already engaged with the brand online, and the digital marketing in place will drive or capture their demand. In theory it is a sound argument, and for some companies born on the internet this could be the case. For others with deep roots in postal marketing, it may or may not be. Only a well-designed test can answer the question definitively, and testing is the recommended course whenever a change in marketing is being contemplated.

This very question came up last year with a CohereOne client who built their company on catalog before Al Gore had even invented the internet. The client believed that their customers had transitioned from catalog customers to digital customers, and that if they could fire on all cylinders with their digital marketing programs, they could save millions in catalog costs. We cautioned against making any wholesale changes based strictly on beliefs, so we took on the challenge of designing and executing a test to identify whether there was a segment of their customer file whose performance would not degrade if it were pulled out of the catalog contacts.

The traditional segmentation of the customer file is based on a weighted RFM+ scoring methodology that groups the file into demi-deciles. To mine the “hyper-digital” customers, we had to develop a different scoring methodology using the digital data available to us. The new score still weighted recency and frequency within the last 12 months, but it also incorporated, at a higher weight, the digital acquisition source, the channel of last purchase, email behavior, and current online behavior through the NaviStone scoring methodology. Accordingly, the top group of customers under the new score are hyper-digital buyers and therefore should not need a postal contact in order to continue buying, right? WRONG!
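To make the idea of a weighted score concrete, here is a minimal Python sketch that combines normalized customer signals into a single score and ranks the file into demi-deciles (20 groups of roughly 5% each). The weights, the feature names, and the `site_engagement` input standing in for the NaviStone score are all hypothetical; the actual model is not published here. The sketch also assumes every feature has already been normalized to a 0 to 1 range.

```python
from dataclasses import dataclass

# Hypothetical weights (not the real model): digital signals are weighted
# more heavily than recency and frequency, as the approach above describes.
WEIGHTS: dict[str, float] = {
    "recency": 0.10,               # recency within the last 12 months
    "frequency": 0.10,             # frequency within the last 12 months
    "digital_acquisition": 0.20,   # acquired through a digital source
    "last_purchase_online": 0.20,  # channel of last purchase was online
    "email_engagement": 0.20,      # email behavior
    "site_engagement": 0.20,       # hypothetical stand-in for a NaviStone score
}


@dataclass
class Customer:
    customer_id: str
    # Each feature is assumed to be pre-normalized to the range [0, 1].
    features: dict[str, float]


def digital_score(c: Customer) -> float:
    """Weighted sum of the customer's signals; a missing signal counts as 0."""
    return sum(w * c.features.get(name, 0.0) for name, w in WEIGHTS.items())


def demi_deciles(customers: list[Customer]) -> dict[str, int]:
    """Rank by score (highest first) and split into 20 groups; group 1 is the top 5%."""
    ranked = sorted(customers, key=digital_score, reverse=True)
    n = len(ranked)
    return {c.customer_id: i * 20 // n + 1 for i, c in enumerate(ranked)}
```

Under this sketch, demi-decile 1 would be the candidate “hyper-digital” group.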

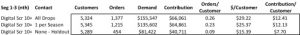

This particular test ran over three seasons, representing 8 months of orders. It consisted of equal groups of customers drawn from the top three traditional scoring segments who also had the highest score under the new digital scoring. One group received all catalog contacts, one group received only the first contact of the season, and a third was held out completely. In theory, the holdout group should perform at the same level as the other two, or at least well enough that the additional expense of a catalog contact would not be justified.
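A test like this depends on the three groups being comparable. One way to get equal groups, assuming customers are randomly assigned within each traditional scoring segment, is a stratified shuffle-and-deal, sketched below in Python. The panel names and the `assign_panels` helper are hypothetical; the post does not say exactly how its groups were drawn.

```python
import random
from collections import defaultdict

# Hypothetical panel names for the three test cells described above.
PANELS = ("all_drops", "one_per_season", "holdout")


def assign_panels(segment_of: dict[str, int], seed: int = 0) -> dict[str, str]:
    """Randomly split customers into equal panels, stratified by traditional segment.

    segment_of maps customer_id -> traditional scoring segment (e.g. 1, 2, 3).
    """
    by_segment = defaultdict(list)
    for cid, seg in segment_of.items():
        by_segment[seg].append(cid)

    rng = random.Random(seed)  # seeded so the split can be reproduced
    assignment: dict[str, str] = {}
    for seg in sorted(by_segment):
        ids = sorted(by_segment[seg])  # fixed order before shuffling, for reproducibility
        rng.shuffle(ids)
        # Deal shuffled customers round-robin so each panel gets an equal share of the segment.
        for i, cid in enumerate(ids):
            assignment[cid] = PANELS[i % len(PANELS)]
    return assignment
```

Stratifying by segment keeps each panel's mix of the top three traditional segments the same, so differences in results are more plausibly due to the mailing treatment rather than to the mix of customers.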

As is abundantly clear, the hyper-digital customers did buy at a decent rate of over $15 per customer, but those who received catalog contacts doubled that. In the chart above, contribution takes into account the product cost of goods and the catalog cost. Had management acted on their theory and rolled it out without testing, the results would have been disastrous. That is why we test: the numbers don’t lie. The test also had several additional layers, including panels with lower digital scores and panels with lower traditional scores, and these trended the same way, supporting the efficacy of the catalog program in conjunction with the existing digital programs.
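Contribution per customer, as described for the chart, can be sketched as revenue less product cost of goods and catalog cost, divided by the number of customers in the panel. This is a simplified, hypothetical calculation; the `PanelResult` structure and helper names are illustrative, and the actual analysis may include cost lines the post does not mention.

```python
from dataclasses import dataclass


@dataclass
class PanelResult:
    customers: int       # number of customers in the panel
    revenue: float       # total demand from the panel over the test period
    cogs: float          # product cost of goods for those orders
    catalog_cost: float  # total cost of catalogs mailed to the panel (0 for the holdout)


def contribution_per_customer(p: PanelResult) -> float:
    """Revenue minus cost of goods and catalog cost, averaged over the panel."""
    return (p.revenue - p.cogs - p.catalog_cost) / p.customers


def incremental_vs_holdout(test: PanelResult, holdout: PanelResult) -> float:
    """Extra contribution per customer a mailed panel earns over the holdout."""
    return contribution_per_customer(test) - contribution_per_customer(holdout)
```

Because catalog cost is subtracted before the comparison, a positive increment over the holdout means the catalog contacts more than paid for themselves.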

One additional piece of information we could glean from the test was the low incremental value of the “all drops” group over the “one per season” group. On the surface, it looked as if we could simply reduce the number of contacts without losing much incrementally. However, a deeper dive into the data pinpointed one season that was dragging performance down, so on balance it was still justified to proceed with the every-drop strategy.
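The deeper dive amounts to breaking the incremental value of the extra drops out by season. A minimal sketch, assuming contribution per customer is available for each panel and season; the season labels and helper names are hypothetical:

```python
def seasonal_increment(all_drops: dict[str, float],
                       one_per_season: dict[str, float]) -> dict[str, float]:
    """Per-season extra contribution per customer from mailing every drop vs. only the first."""
    return {season: all_drops[season] - one_per_season[season] for season in all_drops}


def weakest_season(increments: dict[str, float]) -> str:
    """The season with the lowest increment, i.e. the one pulling the overall result down."""
    return min(increments, key=increments.get)


def mean_increment_excluding(increments: dict[str, float], season: str) -> float:
    """Average increment across the remaining seasons, to check whether one season skews the total."""
    others = [value for s, value in increments.items() if s != season]
    return sum(others) / len(others)
```

If the remaining seasons show a healthy increment once the weak season is set aside, the low overall number is a single-season effect rather than evidence that the extra drops are unnecessary.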

The takeaway here is that even when something sounds legitimate in theory, it is best practice to test before implementing large changes.

If you’ve got ideas you want to test, contact me at TSeaton@cohereone.com.